12 Types of MVP: A Practical Guide to MVP Models for Validation

Key Takeaways

- MVP = learning tool, not a “small launch.” Build the minimum that tests your core hypothesis with real users. Scope is minimal, but quality must stay solid.

- Start with the riskiest assumption. Choose an MVP type based on what you need to validate first: demand, value, usability, operations, or technical feasibility.

- Low-fidelity vs high-fidelity matters. Low-fidelity MVPs validate interest fast and cheap; high-fidelity MVPs validate real behavior through working experiences.

- Pick the right model from 12 MVP types: Landing Page, Email, Fake Door, Low-Fidelity Prototype, Demo Video, Crowdfunding (low-fi) and Single Feature, MLP, High-Fidelity Digital Prototype, Concierge, Wizard of Oz, Piecemeal (high-fi).

- Measure success with learning metrics. Focus on activation, retention, willingness to pay, task completion, and qualitative feedback; avoid vanity metrics like raw signups alone.

Launching a product without validation is like building a bridge without testing the foundation. Most likely, you will waste time, money, and momentum on features nobody wants.

A minimum viable product changes that equation. Instead of building everything upfront, you test your riskiest assumptions first with real users.

A minimum viable product (MVP) is the smallest version of a product that delivers enough value to early users while generating validated learning about customer needs and product direction.

This guide covers 12 types of MVPs split between low-fidelity and high-fidelity approaches. Each model serves different hypotheses, budgets, and timelines. By the end, you will know which MVP type fits your validation needs and how to measure success.

Companies that validate early reduce development costs by up to 60% and avoid building features users never touch. Those who skip validation often discover mistakes after launch when pivoting becomes too expensive.

What is a minimum viable product (MVP)?

A minimum viable product is the simplest version of a product that allows you to test a core hypothesis with real users while minimizing development effort and cost.

The term was coined by Frank Robinson around 2001 and popularized by Eric Ries in The Lean Startup, and the concept reshaped product development. Traditional methods required building complete products before getting user feedback. MVPs flip this model by prioritizing learning over features.

Think of an MVP as an experiment, not a product release. You build just enough to test whether your fundamental assumptions are correct. Does the problem you are solving actually exist? Will users pay for your solution?

An MVP is not a beta version, a poorly built product, or a prototype built only to explore design concepts. Such prototypes deliver no real value on their own, and beta versions are nearly complete products. MVPs sit between these extremes, delivering enough value to attract early users while remaining focused on specific learning goals.

MVP development focuses on reducing risk by testing hypotheses with minimal investment.

What an MVP is NOT:

- A broken or incomplete product shipped to save time

- A prototype without real user value

- A beta version missing a few features

- An excuse for poor quality or user experience

The minimum refers to scope, not quality. You deliver fewer features, but those features work reliably and solve a real problem.

Why is an MVP important in product development?

Building products without validation leads to predictable failures. Teams spend months developing features nobody uses and solving problems that do not exist.

An MVP interrupts this cycle by inserting evidence into your decision-making process.

Risk Reduction

Every unvalidated assumption carries risk. An MVP tests these assumptions early when changes cost less.

Faster Time to Market

Full product builds take months. MVPs launch in weeks, letting you claim market position while competitors plan.

Cost Efficiency

Building less means spending less. Custom software development projects starting with MVP validation spend 40-60% less on unnecessary features.

Evidence-Based Decisions

MVPs replace speculation with metrics and real user data.

Avoid Overbuilding

Most products ship with features nobody uses. MVPs prevent this waste by validating demand first.

Dropbox created a demo video showing the concept. Overnight, the video grew the beta waiting list from about 5,000 to 75,000 people, validating demand before the team invested in building out the full product. That single MVP saved months of development.

This approach works across industries. Whether building AI solutions, mobile apps, or enterprise software, validation reduces wasted effort.

Who are the ideal users for an MVP?

Not everyone tolerates incomplete products. Your MVP needs users who understand they are participating in validation, not buying a finished solution.

Early adopters represent your ideal MVP audience. These users actively seek new solutions to existing problems. They tolerate rough edges because they value being first and influencing product direction.

Problem-aware users know they have a pain point and are searching for solutions. They forgive missing features if your MVP solves their core issue better than alternatives.

MVP users must accept limited features, potential bugs, changes based on feedback, and direct involvement in shaping the product.

B2B vs B2C Differences

Business users approach MVPs differently than consumers. B2B early adopters often have budget authority and provide structured feedback. Consumer early adopters move faster but also churn faster.

Who to Avoid

Mass market users expect polish and comprehensive features. Launching an MVP to this audience damages your brand and generates misleading feedback.

Your MVP should target the smallest viable audience that can validate your core hypothesis.

What differentiates an MVP from a full product launch?

The difference extends beyond feature count. Each approach serves different goals, targets different audiences, and measures success differently.

MVPs prioritize speed and learning. You ship quickly, gather data, and iterate based on evidence. Success means validating your core hypothesis, not hitting revenue targets.

Full product launches prioritize completeness and scale. Success means market penetration and sustainable operations.

The investment difference matters. An MVP might cost $15,000-$50,000 and take 6-12 weeks. The same product as a full launch could require $150,000-$500,000 and 9-18 months.

Launch an MVP when testing assumptions. Launch a full product when you have validated demand and understand your market.

What is the difference between low-fidelity and high-fidelity MVPs?

MVP approaches split into two categories based on how closely they simulate the final product experience.

Low-fidelity MVPs validate interest and demand without building functional software. These approaches test whether users want your solution before development investment. Think landing pages, demo videos, or email campaigns.

High-fidelity MVPs simulate real product usage and collect behavioral data. Users interact with working features and experience actual value.

Low-fidelity approaches let you test multiple hypotheses for less than the cost of one high-fidelity build. However, they only capture stated preferences. High-fidelity MVPs reveal what people do, not what they say.

The right choice depends on your biggest unknown. Testing demand? Start low-fidelity. Testing usability? Build high-fidelity.

Ontik Technology's agile development process supports both approaches, helping teams choose the right fidelity level and transition smoothly from validation to production.

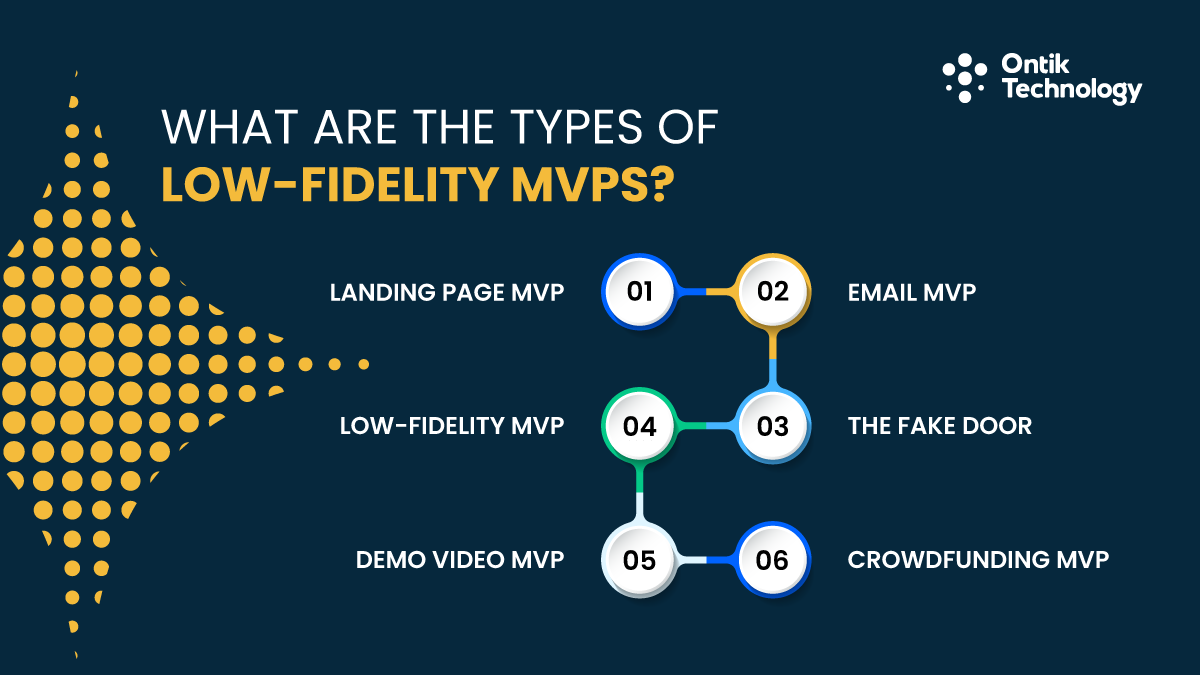

What are the types of Low-Fidelity MVPs?

Low-fidelity MVPs validate demand or interest without building functional software. These approaches test whether users want your solution before you invest in development.

Low-fidelity models share some clear advantages. They cost less, launch faster, and let you test multiple variations at the same time. The tradeoff is limited learning depth because you only capture interest rather than actual behavior.

Each low-fidelity MVP type tests different hypotheses and generates different kinds of insights. Picking the right approach depends on what you need to learn and how quickly you need those answers.

1 Landing Page MVP: What value proposition will you communicate on the landing page?

A landing page MVP presents your product concept through a single webpage explaining the problem, solution, and value proposition. Visitors can sign up for early access or pre-order.

Primary Hypothesis Tested: Will people express interest in this solution?

You create a compelling page, drive traffic, and measure signup rates. High conversion suggests genuine interest. Low rates indicate problems with your value proposition.

Metrics to Track:

- Landing page conversion rate (target: 15-40%)

- Email signup quality

- Traffic source effectiveness

- Time on page and scroll depth
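To make the first metric concrete, here is a minimal Python sketch that computes signup conversion per traffic source from raw visit records. The `Visit` record and its fields are hypothetical, and the 15% threshold simply reuses the low end of the target range above rather than a universal benchmark.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Visit:
    source: str      # hypothetical field: traffic source, e.g. "ads" or "newsletter"
    signed_up: bool  # whether the visitor left an email or pre-ordered


def conversion_by_source(visits: list[Visit]) -> dict[str, float]:
    """Return the signup conversion rate for each traffic source."""
    totals: dict[str, int] = defaultdict(int)
    signups: dict[str, int] = defaultdict(int)
    for v in visits:
        totals[v.source] += 1
        if v.signed_up:
            signups[v.source] += 1
    return {src: signups[src] / totals[src] for src in totals}


def meets_target(rate: float, target: float = 0.15) -> bool:
    """Compare a conversion rate against the low end of the 15-40% target."""
    return rate >= target


# Hypothetical traffic: 30 visits from ads, 20 from a newsletter.
visits = (
    [Visit("ads", True)] * 3 + [Visit("ads", False)] * 27
    + [Visit("newsletter", True)] * 6 + [Visit("newsletter", False)] * 14
)
rates = conversion_by_source(visits)
for source, rate in sorted(rates.items()):
    print(f"{source}: {rate:.0%}, target met: {meets_target(rate)}")
```

Splitting by source matters because a single blended rate can hide one channel that converts well and another that does not.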

When to Use

Choose landing pages for quick demand validation with minimal investment. This works well for products solving clear pain points.

Buffer used a landing page MVP before building their social media scheduling tool. Within days, they had hundreds of interested users validating demand before writing code.

2 Email MVP: Who will receive your MVP emails?

An email MVP delivers your product value through structured email campaigns instead of software. You manually provide the service via email to test whether users find value.

Primary Hypothesis Tested: Do users value this service enough to engage repeatedly?

You send emails containing the value you plan to automate later. User engagement validates whether the core value proposition resonates.

Metrics to Track:

- Email open rates (benchmark: 20-30%)

- Click-through rates

- Response rates

- Subscriber retention over time
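A minimal Python sketch of these email metrics, assuming you can export per-send counts from your email tool. The `Campaign` fields are hypothetical, and click-through rate is computed here against emails sent; some tools report it as a share of opens instead, so check which definition your benchmark uses.

```python
from dataclasses import dataclass


@dataclass
class Campaign:
    sent: int     # emails delivered in this send
    opened: int   # unique opens
    clicked: int  # unique clicks on any link
    replied: int  # subscribers who replied directly


def email_metrics(c: Campaign) -> dict[str, float]:
    """Open, click-through, and response rates, each as a share of emails sent."""
    if c.sent == 0:
        raise ValueError("no emails sent")
    return {
        "open_rate": c.opened / c.sent,
        "click_through_rate": c.clicked / c.sent,
        "response_rate": c.replied / c.sent,
    }


def retention(subscribers_by_week: list[int]) -> list[float]:
    """Share of the week-0 subscriber list still subscribed in each later week."""
    start = subscribers_by_week[0]
    return [n / start for n in subscribers_by_week]


print(email_metrics(Campaign(sent=200, opened=50, clicked=12, replied=4)))
print(retention([200, 190, 180, 170]))
```

With these hypothetical numbers the open rate is 25%, inside the 20-30% benchmark above, while the weekly retention list shows whether that interest holds up over repeated sends.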

When to Use

Email MVPs suit services delivering periodic value. News curation, personalized recommendations, or advisory services work well in email format.

A startup planning an AI-powered job matching platform could manually curate job listings and email them to subscribers. High engagement validates the value proposition before automating.

3 Fake Door MVP: What feature interest are you measuring with a fake door test?

A fake door MVP shows users a feature that does not yet exist. When users click, you collect their information and explain the feature is coming soon.

Primary Hypothesis Tested: Which features generate the most user interest?

You add buttons or menu items for features you might build. Click-through rates reveal which features users actually want.

Metrics to Track:

- Click-through rate on fake door elements

- User segment differences

- Conversion from interest to email signup

- Qualitative feedback
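One way to turn fake door logs into a ranked roadmap is sketched below in Python. The feature names and counts are hypothetical; the sketch assumes you log how often each placeholder button was shown, how often it was clicked, and how many clickers left an email.

```python
from collections import Counter


def fake_door_ctr(impressions: Counter, clicks: Counter) -> list[tuple[str, float]]:
    """Rank placeholder features by click-through rate (clicks / times the entry point was shown)."""
    rates = {f: clicks[f] / impressions[f] for f in impressions if impressions[f] > 0}
    return sorted(rates.items(), key=lambda item: item[1], reverse=True)


def signup_conversion(clicks: Counter, signups: Counter) -> dict[str, float]:
    """Share of clicks that left an email on the 'coming soon' message."""
    return {f: signups[f] / clicks[f] for f in clicks if clicks[f] > 0}


# Hypothetical feature names and counts.
impressions = Counter({"export_pdf": 1000, "team_sharing": 800})
clicks = Counter({"export_pdf": 40, "team_sharing": 96})
signups = Counter({"export_pdf": 10, "team_sharing": 30})
print(fake_door_ctr(impressions, clicks))
print(signup_conversion(clicks, signups))
```

Dividing by impressions rather than raw clicks keeps features fair when their buttons were shown a different number of times. To compare user segments, key the counters by `(segment, feature)` tuples; both functions work unchanged.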

When to Use

Fake door tests work with existing user bases when prioritizing your roadmap.

Amazon used fake doors to test new product categories before investing in inventory. They measured clicks and only stocked products generating sufficient interest.

Always inform users immediately that the feature is unavailable and give them a way to express interest.

4 Low-Fidelity Prototype: What parts of the product will the prototype illustrate?

A low-fidelity prototype uses basic mockups or clickable designs to simulate product flow without functional code. Users interact with visual representations to test navigation and workflows.

Primary Hypothesis Tested: Can users understand and navigate the intended product experience?

Metrics to Track:

- Task completion rates

- Time to complete workflows

- Points where users get confused

- Feature discovery rates
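A minimal Python sketch for summarizing prototype test sessions, assuming an observer records for each tester whether they finished the task, how long it took, and which screens caused hesitation. The `Session` record and screen names are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass, field
from statistics import median
from typing import Optional


@dataclass
class Session:
    completed: bool  # did the tester finish the task?
    seconds: float   # time spent on the task
    stuck_on: list[str] = field(default_factory=list)  # screens where they hesitated or asked for help


def summarize(sessions: list[Session]) -> dict:
    """Task completion rate, median time for completed tasks, and the most common confusion points."""
    done = [s for s in sessions if s.completed]
    stuck = Counter(screen for s in sessions for screen in s.stuck_on)
    median_seconds: Optional[float] = median(s.seconds for s in done) if done else None
    return {
        "completion_rate": len(done) / len(sessions) if sessions else 0.0,
        "median_seconds": median_seconds,
        "top_confusion": stuck.most_common(3),
    }


sessions = [
    Session(True, 42),
    Session(True, 58, ["settings"]),
    Session(False, 120, ["settings", "checkout"]),
    Session(True, 35),
]
print(summarize(sessions))
```

The median is used instead of the mean so that one tester who wandered off does not distort the typical workflow time.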

When to Use

Low-fidelity prototypes work when testing information architecture or interface concepts for complex products.

A mobile app development project might create clickable prototypes testing three navigation patterns. User testing reveals which feels most intuitive before developers write code.

5 Demo Video MVP: What story or value proposition will the demo video communicate?

A demo video MVP presents your product concept through video showing how it works and what value it delivers.

Primary Hypothesis Tested: Does the product concept resonate strongly enough to drive action?

The video explains the problem, demonstrates the solution, and shows user transformation. You measure interest through views, engagement, and conversions.

Metrics to Track:

- Video completion rate (target: 50%+)

- Conversion from view to signup

- Social sharing and organic reach

- Comments and qualitative feedback
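Video platforms define a "completed" view differently, so here is a small Python sketch that computes completion from raw watch durations. The 95% cutoff is an assumption, not a platform standard, and the watch times and signup counts are hypothetical.

```python
def completion_rate(watch_seconds: list[float], video_length: float, threshold: float = 0.95) -> float:
    """Share of views that watched at least `threshold` of the video (0.95 = 95%)."""
    if not watch_seconds:
        return 0.0
    finished = sum(1 for w in watch_seconds if w >= threshold * video_length)
    return finished / len(watch_seconds)


def view_to_signup(views: int, signups: int) -> float:
    """Share of viewers who signed up after watching."""
    return signups / views if views else 0.0


# Hypothetical data: five views of a 120-second video.
rate = completion_rate([120, 60, 115, 30, 118], video_length=120)
print(f"completion: {rate:.0%}, meets 50% target: {rate >= 0.5}")
print(f"view to signup: {view_to_signup(2000, 90):.1%}")
```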

When to Use

Demo videos work for products whose value is easier to show than to explain.

Dropbox's demo video grew its beta waiting list from about 5,000 to 75,000 people overnight, validating massive demand before the team built out its cloud infrastructure.

Video quality matters less than clarity of the value proposition.

6 Crowdfunding MVP: How much funding must be raised to begin production?

A crowdfunding MVP validates demand by asking users to pre-pay for your product before it exists. You set funding goals and measure interest through financial commitments.

Primary Hypothesis Tested: Will users pay for this product before it exists?

Successful campaigns prove demand and generate capital. Failed campaigns reveal weak value propositions without wasting development resources.

Metrics to Track:

- Funding percentage achieved

- Backer count and average pledge

- Conversion rate from page view to pledge

- Backer demographics
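The quantitative crowdfunding metrics above reduce to a few ratios. A minimal Python sketch, assuming you have the funding goal, the list of individual pledge amounts, and the campaign page's view count; all figures in the example are hypothetical.

```python
def campaign_summary(goal: float, pledges: list[float], page_views: int) -> dict[str, float]:
    """Funding percentage, backer count, average pledge, and page-view-to-pledge conversion."""
    raised = sum(pledges)
    backers = len(pledges)
    return {
        "funded_pct": 100 * raised / goal,
        "backers": backers,
        "average_pledge": raised / backers if backers else 0.0,
        "view_to_pledge": backers / page_views if page_views else 0.0,
    }


# Hypothetical campaign: $20,000 goal, 300 backers at $50 and 40 at $150, 8,500 page views.
summary = campaign_summary(20_000, [50.0] * 300 + [150.0] * 40, page_views=8_500)
print(summary)
```

Looking at backer count alongside the average pledge helps distinguish broad demand from a few large supporters.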

When to Use

Crowdfunding works for physical products or consumer electronics where visual presentation drives interest.

The Pebble smartwatch raised over $10 million on Kickstarter in 2012, proving consumer interest before Apple or Samsung entered the market.

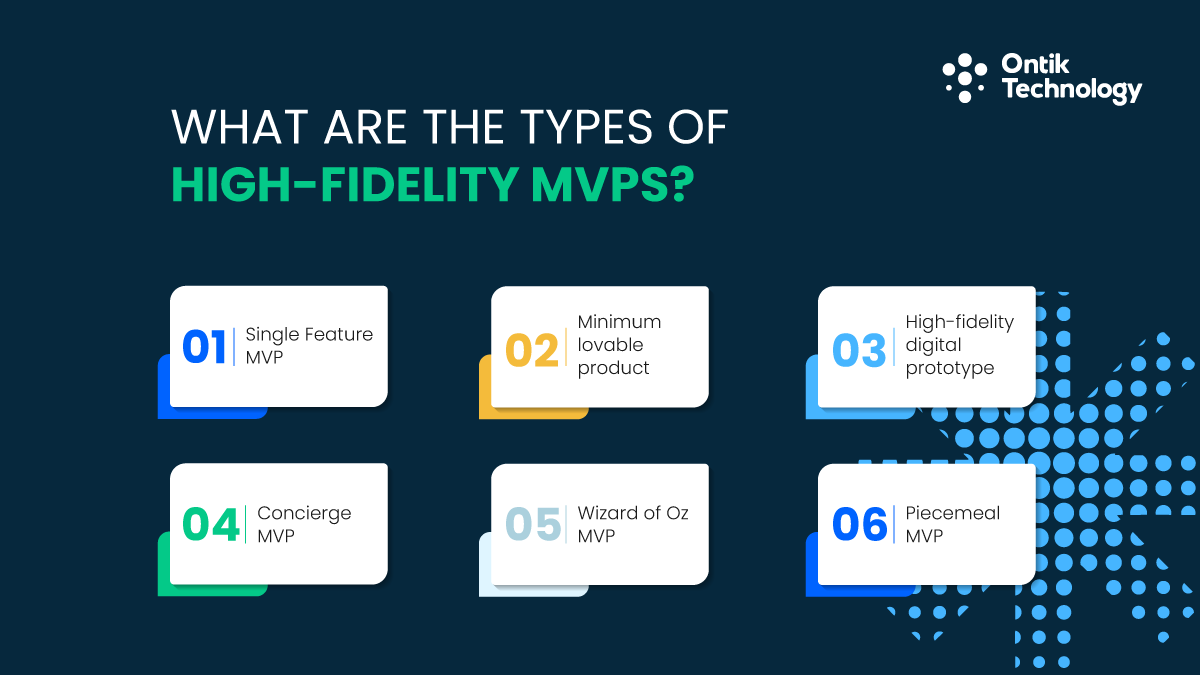

What are the types of High-Fidelity MVPs?

High-fidelity MVPs simulate real product usage and collect behavioral data. Users interact with working features, complete tasks, and experience actual value delivery.

These approaches cost more but generate deeper insights. You learn what users actually do, not just what they say.

7 Single Feature MVP: Which feature is most critical to the product's value?

A single feature MVP builds one core capability that delivers your primary value proposition. You deliberately exclude supporting features to focus all your resources on making the most critical functionality work well.

What is Built

You develop the feature that solves the main problem or delivers the biggest benefit. Everything else gets eliminated or handled manually behind the scenes. This approach requires identifying which single feature matters most and having the discipline to ship without all the extras.

Cost vs Learning Tradeoff

Building a single feature costs far less than a full product but still requires functional development work. You invest in quality code, proper architecture, and reliable performance for that one feature. The learning comes from watching how users actually interact with your core value proposition.

Instagram started as a single feature MVP focused exclusively on photo filters. No messaging, no stories, no reels, no explore page. Just filtered photos that you could share. This tight focus let them perfect the core experience and validate demand before they added anything else.

When to Use

Pick single feature MVPs when your value proposition centers on one specific capability that changes everything. If users can achieve their main goal with just that feature, everything else becomes enhancement rather than requirement.

A web development project for a project management tool might launch with just task assignment and completion tracking. Reporting and analytics get handled manually through spreadsheets until the team validates that core workflows actually work for users.

8 Minimum Lovable Product: Which aspects make the product delightful instead of just usable?

A minimum lovable product (MLP) extends the MVP concept by adding elements that create emotional connection and delight. You build core features plus carefully chosen details that make users love the experience.

What is Built

You develop essential functionality plus design touches, micro-interactions, or unique features that set the product apart emotionally.

Slack is often cited as an MLP. Its team chat worked well, and the team also invested in delightful touches such as thoughtful empty states. These details created emotional attachment that drove adoption.

When to Use

Choose MLPs when entering competitive markets where functionality alone does not differentiate you.

9 High-Fidelity Digital Prototype: How close is the prototype to the final product experience?

A high-fidelity digital prototype looks and feels like a finished product but runs on simplified backend systems or simulated data. Users interact with realistic interfaces while you manually manage processes that would eventually get automated.

What is Built

You create polished front-end interfaces and user flows using real design systems and interaction patterns. The backend might use basic databases and APIs, but complex logic gets simplified or handled manually behind the scenes.

Cost vs Learning Tradeoff

High-fidelity prototypes cost less than full products because you skip complex architecture and edge case handling. You learn about interface usability, workflow effectiveness, and feature priority without building complete systems that might need changing.

Example

A fintech startup building a business intelligence platform might create a high-fidelity prototype with real dashboards and data visualizations. Behind the scenes, they manually process data uploads and run analyses instead of building automated ETL pipelines. Users experience realistic interfaces while the team validates which specific analyses actually matter most.

This approach works especially well for UI/UX design validation where visual polish and interaction patterns significantly impact user perception and behavior.

10 Concierge MVP: How will learnings be converted into automated features?

A concierge MVP delivers your product value through manual, white-glove service before you automate anything. You personally guide each user through workflows, manually perform operations, and deliver results as if software handled everything automatically.

What is Built

You might build basic interfaces for user input and output display, but core processing happens completely manually. This lets you test value delivery and refine workflows before investing thousands in automation.

Manual vs Automated

Everything starts manual. You personally handle requests, process data, generate results, and deliver output. As clear patterns emerge, you selectively automate the most common workflows while continuing manual service for edge cases that happen less often.
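The "automate the common cases, keep the edge cases manual" rule can be made explicit with a simple frequency count over your concierge request log. A minimal Python sketch; the request type names and the 20% cutoff are hypothetical, and in practice you would also weigh how hard each workflow is to automate.

```python
from collections import Counter


def automation_candidates(request_log: list[str], min_share: float = 0.2) -> tuple[list[str], list[str]]:
    """Split request types into (automate, keep_manual) by how often they occur."""
    counts = Counter(request_log)
    total = sum(counts.values())
    automate = [kind for kind, n in counts.most_common() if n / total >= min_share]
    manual = [kind for kind in counts if kind not in automate]
    return automate, manual


# Hypothetical log of 20 manually handled requests.
log = ["weekly_plan"] * 12 + ["swap_recipe"] * 5 + ["allergy_check"] * 2 + ["bulk_order"]
print(automation_candidates(log))
```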

Example

Food on the Table started as a concierge MVP. The founder personally called grocery stores to check prices, created meal plans by hand for each family, and emailed shopping lists to subscribers every week. This completely manual process validated that users actually wanted personalized meal planning before they built any automated systems.

When to Use

Pick concierge MVPs for complex services where you need to understand user needs deeply before automating. The manual interaction generates insights that surveys and usage data miss.

The key advantage is learning speed. You can change workflows daily based on direct user interaction instead of waiting weeks for enough data patterns to emerge from automated systems.

11 Wizard of Oz MVP: What functions appear automated but are manually executed?

A Wizard of Oz MVP presents a fully automated experience while humans manually perform operations behind the scenes.

What is Built

You build complete user interfaces that look automated. Behind this, human operators process requests and generate results manually.

Zappos used this early on. When customers ordered shoes, employees drove to stores, bought shoes with personal credit cards, and shipped them. The website looked like normal e-commerce, but no inventory system existed.

When to Use

Wizard of Oz MVPs work for testing algorithmic products like recommendations or matching before investing in complex automation systems.
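Architecturally, a Wizard of Oz MVP usually means the user-facing interface talks to something that behaves like an asynchronous API, while the "processing" step is a human working through a queue. A minimal in-memory Python sketch of that shape; the class and method names are hypothetical, and a real build would persist the queue and results in a database.

```python
from __future__ import annotations

import queue
import uuid
from dataclasses import dataclass
from typing import Optional


@dataclass
class Request:
    id: str
    user: str
    payload: str  # e.g. a free-text request for a recommendation


class WizardBackend:
    """Looks like an automated service to the UI; a human operator fulfils every request."""

    def __init__(self) -> None:
        self.pending: queue.Queue[Request] = queue.Queue()  # thread-safe FIFO of unhandled requests
        self.results: dict[str, str] = {}

    def submit(self, user: str, payload: str) -> str:
        """Called by the user-facing UI; returns a ticket id immediately, like an async API."""
        req = Request(id=str(uuid.uuid4()), user=user, payload=payload)
        self.pending.put(req)
        return req.id

    def next_task(self) -> Optional[Request]:
        """Called by the operator's internal tool to take the oldest unhandled request."""
        try:
            return self.pending.get_nowait()
        except queue.Empty:
            return None

    def complete(self, request_id: str, result: str) -> None:
        """The operator records the answer the UI will show as if it were computed."""
        self.results[request_id] = result

    def poll(self, request_id: str) -> Optional[str]:
        """Called by the UI until a result is ready; None means still 'processing'."""
        return self.results.get(request_id)


backend = WizardBackend()
ticket = backend.submit("ana", "trail running shoes under $120")
task = backend.next_task()  # the operator picks up the request
backend.complete(task.id, "Suggested: two trail models in her size")
print(backend.poll(ticket))
```

Because the UI only ever sees `submit` and `poll`, the human step can later be swapped for a real algorithm without changing the interface users already know.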

12 Piecemeal MVP: Which existing tools can be combined to test the product?

A piecemeal MVP combines existing tools and services to simulate your product experience without building custom software. You use third-party platforms, APIs, and off-the-shelf tools to deliver value while testing whether your concept actually works.

What is Built

Almost nothing from scratch. You connect existing services through tools like Zapier, use spreadsheets for databases, grab form builders for input, and rely on email for communication. The goal is validating your concept using tools that already exist and work.

Cost vs Learning Tradeoff

Piecemeal MVPs cost the least among high-fidelity options because you are renting functionality instead of building it. The tradeoff is limited customization and potentially awkward workflows as you force existing tools to serve purposes they were not designed for.

Example

Groupon started as a piecemeal MVP. They used WordPress for their website, generated PDF coupons with FileMaker, and delivered deals to subscribers by email. No custom deal management software existed at all. This let them test whether people actually wanted daily deals before investing anything in platform development.

A modern example might combine Airtable for data storage, Typeform for surveys and forms, Zapier for connecting everything, and Mailchimp for email communications to test a completely new service model.

When to Use

Pick piecemeal MVPs when existing tools already cover most of your required functionality. This works well for validating process-oriented products where the workflow matters far more than the specific interface or branding.

The challenge is knowing when to move from piecemeal tools to custom development. This usually happens when operational overhead exceeds the cost of building or when customer experience starts suffering from using disconnected tools that do not talk to each other well.
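The cost side of that decision can be estimated as a break-even horizon: how many months of savings it takes to recover the cost of a custom build. A minimal Python sketch; every figure in the example is hypothetical, and it ignores the customer experience trigger mentioned above, which has to be judged separately.

```python
import math


def breakeven_months(
    build_cost: float,
    monthly_tool_cost: float,
    monthly_manual_hours: float,
    hourly_rate: float,
    monthly_run_cost_after: float,
) -> float:
    """Months until a custom build pays for itself compared with staying on stitched-together tools."""
    current_monthly = monthly_tool_cost + monthly_manual_hours * hourly_rate
    monthly_savings = current_monthly - monthly_run_cost_after
    if monthly_savings <= 0:
        return math.inf  # custom software would never pay back on cost alone
    return build_cost / monthly_savings


# Hypothetical figures: $30,000 build, $400/month in SaaS tools, 60 manual hours at $50/hour,
# and $600/month to run the custom system afterwards.
print(round(breakeven_months(30_000, 400, 60, 50, 600), 1))
```

With these inputs the build pays back in roughly 10.7 months; most of the saving comes from eliminating manual hours rather than tool subscriptions.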

How to Choose the Right Type of MVP for Your Product

Selecting the right MVP type depends on your biggest unknown. Different hypotheses require different validation approaches.

Start with understanding what you need to learn. Testing demand? Use low-fidelity approaches. Testing usability? Build high-fidelity MVPs.

Budget and timeline also influence choice. Low-fidelity MVPs cost anywhere from a few hundred to a few thousand dollars and launch in days. High-fidelity models require $15,000-$100,000 and take weeks to months.

Strategic Sequencing

Many successful products use multiple MVP types. Start with a landing page for basic demand. Progress to a demo video for deeper engagement. Build a prototype to test workflows. Launch a single feature MVP for behavioral data.

Ontik Technology's dedicated development teams help clients navigate these choices based on product type and learning objectives.

How should you measure success for your MVP?

Choosing the right metrics determines whether your MVP generates useful insights or misleading signals.

Focus on learning metrics:

Activation - What percentage complete your core workflow? High rates suggest clear value propositions.

Retention - Do users come back? Retention reveals whether your product creates lasting value or novelty interest.

Willingness to Pay - Will users convert to paid plans? Measure intent through questions or pre-order requests.

Task Completion - Can users accomplish their goals? High abandonment signals usability problems.

Qualitative Feedback - What do users say? Quotes from interviews often reveal insights metrics miss.
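Activation and retention are the two metrics most often computed incorrectly, so here is a minimal Python sketch of one common definition of each. It assumes you can export the set of signed-up users, the set who completed the core workflow, each user's first-use date, and their activity dates; the user ids and dates are hypothetical.

```python
from datetime import date, timedelta


def activation_rate(signed_up: set[str], completed_core: set[str]) -> float:
    """Share of signed-up users who completed the core workflow at least once."""
    if not signed_up:
        return 0.0
    return len(signed_up & completed_core) / len(signed_up)


def week_n_retention(first_seen: dict[str, date], activity: dict[str, list[date]], n: int) -> float:
    """Share of users active again during week n after first use (days 7n through 7n + 6)."""
    if not first_seen:
        return 0.0
    retained = 0
    for user, start in first_seen.items():
        window_start = start + timedelta(days=7 * n)
        window_end = window_start + timedelta(days=6)
        if any(window_start <= d <= window_end for d in activity.get(user, [])):
            retained += 1
    return retained / len(first_seen)


start = date(2024, 1, 1)
first_seen = {u: start for u in ["a", "b", "c", "d"]}
activity = {
    "a": [date(2024, 1, 9)],   # day 8: inside week 1
    "b": [date(2024, 1, 3)],   # day 2: still week 0
    "c": [date(2024, 1, 15)],  # day 14: week 2
}
print(activation_rate({"a", "b", "c", "d"}, {"a", "c", "x"}))
print(week_n_retention(first_seen, activity, n=1))
```

Intersecting with the signup set matters: user "x" completed the workflow but is not in this cohort, so it does not inflate activation.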

Avoid Vanity Metrics

Total signups mean nothing without activation. Page views do not predict revenue. These metrics feel good but do not validate your business model.

For AI MVP development projects, user trust and adoption matter more than accuracy metrics.

Set clear success criteria before launching. Define what "validated" means for each hypothesis you are testing.
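Writing the criteria down as data before launch makes it harder to move the goalposts afterwards. A minimal Python sketch; the hypothesis names, metric keys, and thresholds are hypothetical, and it assumes one criterion per hypothesis (a second criterion for the same hypothesis would overwrite the first).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    hypothesis: str   # what you are trying to validate
    metric: str       # which learning metric decides it
    threshold: float  # minimum value that counts as "validated", agreed before launch


def evaluate(criteria: list[Criterion], observed: dict[str, float]) -> dict[str, str]:
    """Label each hypothesis validated, not validated, or not measured."""
    verdicts: dict[str, str] = {}
    for c in criteria:
        value = observed.get(c.metric)
        if value is None:
            verdicts[c.hypothesis] = "not measured"
        elif value >= c.threshold:
            verdicts[c.hypothesis] = "validated"
        else:
            verdicts[c.hypothesis] = "not validated"
    return verdicts


criteria = [
    Criterion("demand", "landing_conversion", 0.15),
    Criterion("value", "week1_retention", 0.30),
    Criterion("willingness_to_pay", "preorder_rate", 0.05),
]
print(evaluate(criteria, {"landing_conversion": 0.22, "week1_retention": 0.18}))
```

Reporting "not measured" separately keeps a missing metric from being mistaken for a failed one.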

How does building an MVP reduce development costs?

Traditional development builds comprehensive features based on assumptions, only discovering after launch that users wanted something different.

MVPs interrupt this waste.

Avoided Features - The average product ships features that fewer than 10% of users touch. MVPs eliminate this waste by building only validated necessities.

Faster Pivots - Discovering mistakes after $200,000 and 12 months forces difficult decisions. MVP validation catches these mistakes when a change costs $10,000 and two months.

Reduced Engineering Hours - A single feature MVP requires 200-400 hours compared to 2,000-4,000 for full products.

Lower Technical Debt - A well-built MVP validates core architectural choices early, which can reduce major refactoring later.

Real Cost Examples

- Landing page MVP: $500-$2,000, validates in days.

- Concierge MVP: $5,000-$15,000, tests over weeks.

- Single feature MVP: $15,000-$50,000, proves fit within months.

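Compare these to full development at $150,000-$500,000 over 9-18 months. At comparable scale, a single feature MVP costs about 10% of a full build ($15,000 against $150,000, or $50,000 against $500,000), and the other MVP types cost less still. MVP validation can also reduce complete failure risk from 70% to under 30%.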
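The MVP share of a full build depends on which ends of the two cost ranges you compare. A quick Python calculation using only the ranges quoted in this section shows the spread for each MVP type; for example, a single feature MVP ranges from 3% of the largest full build to about a third of the smallest.

```python
# Cost ranges quoted in this article, in US dollars (low, high).
MVP_COSTS = {
    "landing page": (500, 2_000),
    "concierge": (5_000, 15_000),
    "single feature": (15_000, 50_000),
}
FULL_BUILD = (150_000, 500_000)


def share_of_full_build(mvp: tuple[int, int], full: tuple[int, int]) -> tuple[float, float]:
    """Smallest and largest possible MVP cost as a fraction of full-build cost."""
    cheapest_ratio = mvp[0] / full[1]  # cheap MVP vs expensive full build
    priciest_ratio = mvp[1] / full[0]  # expensive MVP vs cheap full build
    return cheapest_ratio, priciest_ratio


for name, cost in MVP_COSTS.items():
    low, high = share_of_full_build(cost, FULL_BUILD)
    print(f"{name}: {low:.1%} to {high:.1%} of a full build")
```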

Teams using offshore staff augmentation or remote developers access even more cost-efficient validation.

Which MVP type best matches your current hypothesis?

Every product idea rests on assumptions needing testing. The right MVP depends on which assumption carries highest risk.

Demand Hypothesis - Test with landing pages, demo videos, or crowdfunding.

Value Hypothesis - Use single feature MVPs or concierge services to deliver actual value.

Usability Hypothesis - Build prototypes to test navigation and task completion.

Operational Hypothesis - Start with concierge or Wizard of Oz MVPs to understand true costs.

Technical Hypothesis - Build piecemeal or single feature implementations proving the approach works.

Ask yourself: "If this assumption is wrong, does the entire business fail?" That assumption deserves your MVP investment.
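The hypothesis-to-MVP mapping above, combined with the "does the business fail?" question, can be kept as a small decision aid. A minimal Python sketch: the mapping mirrors this section's list, while the `confidence` score and the example assumptions are hypothetical additions used to break ties among fatal assumptions.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothesis types and matching MVP models, as listed in this section.
MVP_TYPES: dict[str, list[str]] = {
    "demand": ["landing page", "demo video", "crowdfunding"],
    "value": ["single feature", "concierge"],
    "usability": ["prototype"],
    "operational": ["concierge", "wizard of oz"],
    "technical": ["piecemeal", "single feature"],
}


@dataclass
class Assumption:
    text: str
    kind: str             # one of the MVP_TYPES keys
    fatal_if_wrong: bool  # "If this assumption is wrong, does the entire business fail?"
    confidence: float     # hypothetical 0-1 score: how much evidence the team already has


def next_experiment(assumptions: list[Assumption]) -> Optional[tuple[Assumption, list[str]]]:
    """Pick the least-supported fatal assumption and the MVP types suited to testing it."""
    fatal = [a for a in assumptions if a.fatal_if_wrong]
    if not fatal:
        return None
    riskiest = min(fatal, key=lambda a: a.confidence)
    return riskiest, MVP_TYPES[riskiest.kind]


# Hypothetical backlog for a scheduling product.
backlog = [
    Assumption("Clinics will pay monthly for scheduling", "demand", True, 0.2),
    Assumption("Staff can book a slot without training", "usability", True, 0.6),
    Assumption("Users want dark mode", "value", False, 0.1),
]
print(next_experiment(backlog))
```

The non-fatal assumption is ignored even though the team is least sure about it, which is the point of asking whether a wrong answer would sink the business.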

Can this MVP evolve into a long-term product?

Not all MVPs transition smoothly into scaled products. Some create technical debt requiring rebuilding.

The Evolution Path: MVP → MLP → Product

Your initial MVP validates assumptions. As you confirm demand, you evolve to a minimum lovable product adding competitive differentiation. Finally, you build the full product with comprehensive features.

Technical Debt Considerations

MVPs intentionally cut corners to maximize learning speed. The question is whether that debt is manageable or catastrophic.

Concierge and Wizard of Oz MVPs almost always require rebuilding. Single feature MVPs might evolve if built with clean architecture.

Scalability Planning

Even minimal MVPs benefit from basic scalability thinking. Use cloud infrastructure that can grow. Teams leveraging cloud solutions avoid painful migrations as they scale.

Balance speed against sustainability. Move fast enough to validate quickly but not so recklessly that success forces complete rebuilds.

Final Thoughts

The gap between idea and validated product often determines startup success. Teams that validate assumptions early build products people want. Those who skip validation waste resources on features nobody uses.

Your MVP choice shapes learning speed and resource efficiency. Start by identifying your riskiest assumption and match it to the appropriate MVP type. Measure outcomes rigorously and let evidence drive decisions.

Remember that MVPs focus on learning, not launching. Success means gathering evidence that informs your next decision, whether building more features, pivoting, or abandoning the idea.

MVP development services help teams validate ideas in weeks instead of months, reducing risk while preserving resources for features that matter. Whether you choose landing pages or concierge MVPs, commit to honest evaluation and build what users want.

Your next step is simple. Choose one hypothesis, select the appropriate MVP type, and start testing.

FAQ

Can a solo founder build an MVP without a full team?

Yes. Solo founders build MVPs using landing pages or no-code tools. Team augmentation services provide development expertise without full-time hiring.

How long does an MVP typically take to build?

Landing pages launch in 1-3 days. Low-fidelity prototypes need 2-4 weeks. High-fidelity MVPs like single feature builds take 6-12 weeks depending on complexity.

How is qualitative feedback captured during MVP testing?

Capture feedback through user interviews, embedded feedback forms, follow-up surveys, and direct observation during testing sessions asking "why" questions about actions.

What happens after an MVP validates an idea?

After validation, expand features based on feedback, improve infrastructure, transition manual processes to automation, and grow your user base beyond early adopters.

What ROI does MVP validation produce?

MVP validation reduces development costs 40-60%, prevents $100,000-$500,000 investments in unvalidated products, and shortens time to market by 6-12 months.